ASL-to-Text Translator Wins Bronze in InnovateFPGA 2019 Global Contest’s Regional Finals

PITTSBURGH (Nov. 6, 2019) — Technology that translates spoken languages in real time is a boon to travelers and the hearing impaired alike. But what about a language that isn’t spoken?

That problem inspired a team of students from the University of Pittsburgh’s Swanson School of Engineering to create a program that uses machine learning to translate American Sign Language (ASL) into English text. The project recently won the Bronze Award at the InnovateFPGA 2019 Global Contest Regional Finals.

The sign language reader uses a camera and AI to identify the hand gestures used in ASL and translate them into sentences, which could benefit the hundreds of thousands of people in the U.S. who rely on ASL to communicate. The program could run on a smartphone, for example.

“The idea for an ASL translator was formed when our team was researching what kind of embedded AI applications can improve the experience of communication among different groups of people,” says Haihui Zhu, a student studying computer engineering and member of the team. Zhu notes that the Americans with Disabilities Act requires places like hospitals and other public services to provide human ASL interpreters. “Now imagine that software that translates ASL into English can be deployed on a smartphone and executed in real time in an FPGA hardware accelerator. We believe that such a solution can improve the service of public facilities.”

In addition to being a useful tool for the hard of hearing, a key feature of the program is its scalability.

“I think the biggest challenge in this project was to design a fast and scalable machine-learning pipeline. On the input side, it is the video stream from the camera. On the output side, it is the English text,” explains Zhu. “To solve this problem, our strategy was to divide it into multiple stages: hand detection, hand keypoint detection, keypoint-to-alphabet, and finally, lexicon construction from the alphabet stream. To add a new sign to the ‘vocabulary,’ we just need to encode the hand motion of that sign.”
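The four stages Zhu describes can be illustrated with a minimal Python sketch. It is not the team’s implementation: the hand and keypoint detectors are stand-in callables (`detect_hand` and `detect_keypoints` are hypothetical names), and the keypoint-to-letter stage replaces the team’s machine-learning model with simple nearest-template matching, so that adding a sign literally means storing one encoded hand shape through the hypothetical `add_sign` method. The sketch also assumes static handshapes (one template per letter) and that keypoint 0 is the wrist; signs that involve motion would need templates over sequences of frames.

```python
from dataclasses import dataclass, field
from itertools import groupby
from math import dist
from typing import Any, Callable, Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def normalize(keypoints: Sequence[Point]) -> List[Point]:
    """Make keypoints translation- and scale-invariant.

    Assumes keypoints[0] is the wrist: it becomes the origin, and all points
    are scaled so the farthest one lies at distance 1 from it.
    """
    ox, oy = keypoints[0]
    shifted = [(x - ox, y - oy) for x, y in keypoints]
    scale = max(dist((0.0, 0.0), p) for p in shifted) or 1.0
    return [(x / scale, y / scale) for x, y in shifted]


@dataclass
class TemplateClassifier:
    """Hypothetical keypoint-to-letter stage using nearest-template matching."""

    templates: Dict[str, List[Point]] = field(default_factory=dict)

    def add_sign(self, letter: str, keypoints: Sequence[Point]) -> None:
        # Extending the vocabulary only requires storing an encoded hand shape.
        self.templates[letter] = normalize(keypoints)

    def classify(self, keypoints: Sequence[Point], max_distance: float = 0.5) -> Optional[str]:
        query = normalize(keypoints)
        best, best_d = None, float("inf")
        for letter, template in self.templates.items():
            # Mean Euclidean distance between corresponding keypoints.
            d = sum(dist(a, b) for a, b in zip(query, template)) / len(template)
            if d < best_d:
                best, best_d = letter, d
        # Reject hand shapes that match no stored sign closely enough.
        return best if best_d <= max_distance else None


def letters_to_words(frame_letters: Sequence[Optional[str]], min_run: int = 3) -> List[str]:
    """Collapse per-frame letters into words.

    A letter is accepted only if held for at least `min_run` consecutive frames;
    shorter runs are treated as transitions or noise. A run of at least `min_run`
    frames with no letter (None) ends the current word.
    """
    words: List[str] = []
    current: List[str] = []
    for letter, run in groupby(frame_letters):
        if sum(1 for _ in run) < min_run:
            continue
        if letter is None:
            if current:
                words.append("".join(current))
                current = []
        else:
            current.append(letter)
    if current:
        words.append("".join(current))
    return words


def run_pipeline(
    frames: Sequence[Any],
    detect_hand: Callable[[Any], Optional[Any]],
    detect_keypoints: Callable[[Any, Any], Sequence[Point]],
    classifier: TemplateClassifier,
    min_run: int = 3,
) -> List[str]:
    letters: List[Optional[str]] = []
    for frame in frames:
        box = detect_hand(frame)  # stage 1: hand detection (stand-in)
        if box is None:
            letters.append(None)
            continue
        keypoints = detect_keypoints(frame, box)  # stage 2: keypoint detection (stand-in)
        letters.append(classifier.classify(keypoints))  # stage 3: keypoint-to-letter
    return letters_to_words(letters, min_run)  # stage 4: letter stream to words
```

The last stage debounces the per-frame predictions: a letter must persist for `min_run` frames (an assumed threshold of 3), a brief gap lets a letter repeat, and a longer gap ends a word. A short misclassified run between two holds of the same letter would also yield a doubled letter, which is one of the robustness problems a real system would have to address.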

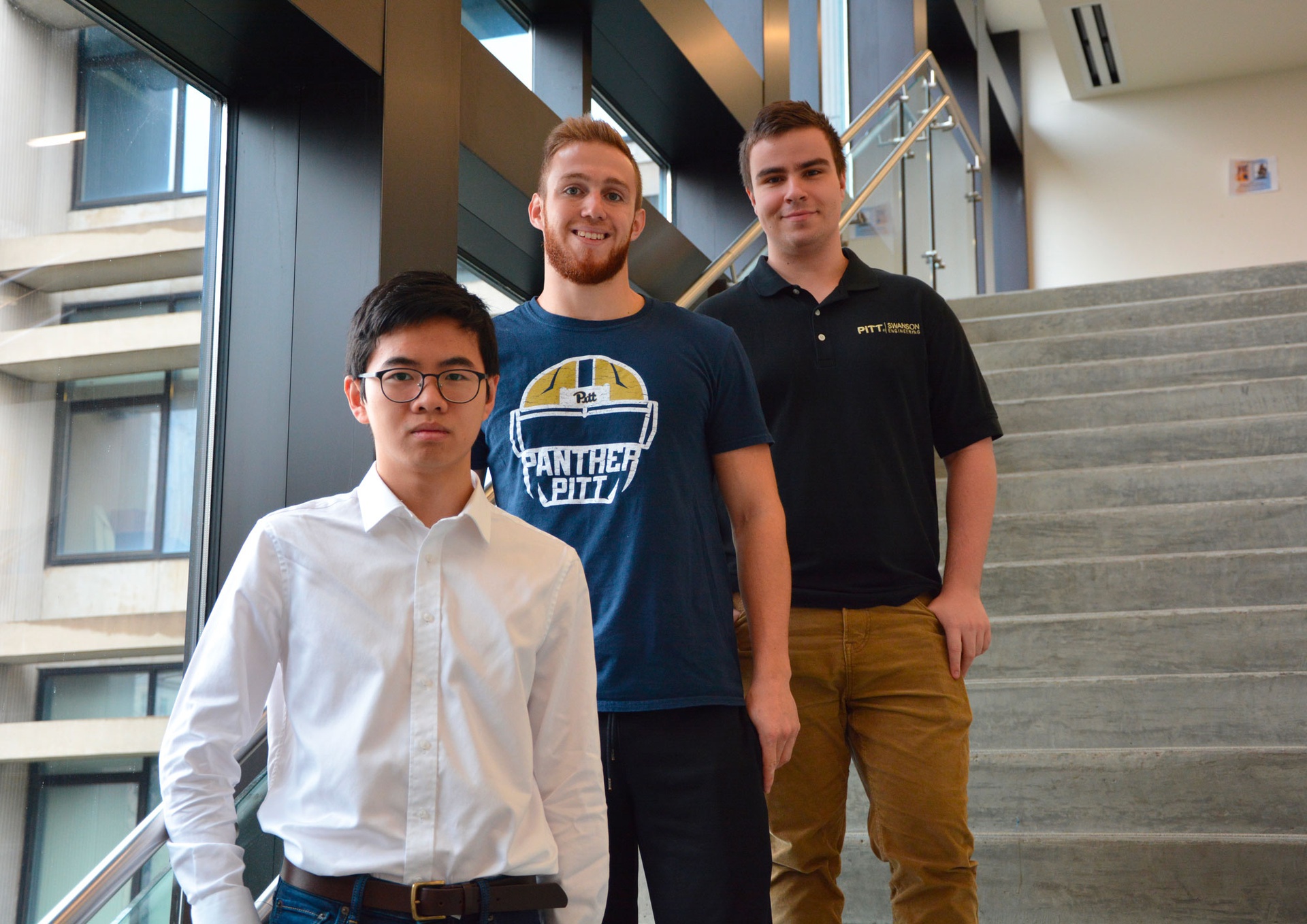

The team included Zhu, Christopher Pasquinelli, and Roman Hamilton, all undergraduates in computer engineering at Pitt. Though the competition is over, Zhu says they plan to continue their work.

“There are several challenges that have not been solved yet: one, our solution only looks at the hand motion, but to truly understand sign language, we must look at facial expressions and hand motion simultaneously; and two, we want to improve the performance of the machine learning model. There are many exciting research and development tasks that we can work on further.”

Students who are interested in machine learning, speech processing, or the project are welcome to join the team.

The InnovateFPGA 2019 Global Contest invited students, professors, makers and industry to showcase their ideas for how field programmable gate arrays (FPGAs) can be used to develop cutting-edge smart devices.

The team’s Bronze Award in the Regional Finals includes a certificate, a cash award of $800, and the Max 10 Plus FPGA main board.

Contact: Maggie Pavlick